Real-Time Translucent Rendering Using GPU-based Texture Space Importance Sampling

Overview

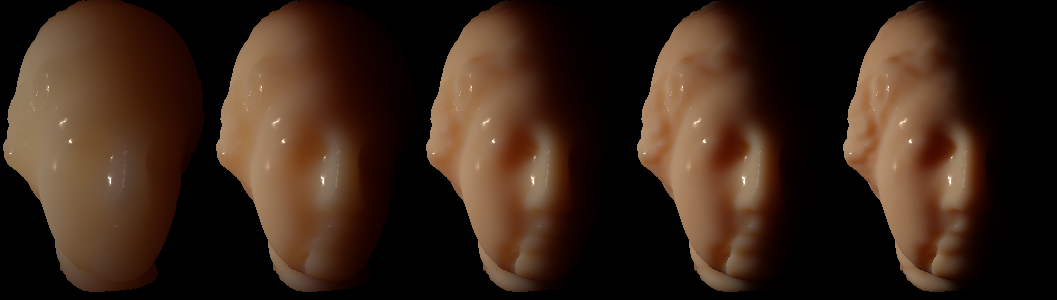

We present a novel approach for real-time rendering of translucent surfaces. The computation of subsurface scattering is performed by first converting the integration over the 3D model surface into an integration over a 2D texture space and then applying importance sampling based on the irradiance stored in the texture. Such a conversion leads to a feasible GPU implementation and makes real-time frame rates possible. Our implementation shows that plausible images can be rendered in real time for complex translucent models with dynamic light and material properties.
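As a rough illustration of the texture space idea, the following Python sketch treats the texture as a flat list of texels, assuming each texel stores the surface position it covers, its irradiance E_i and the surface area A_i it represents (the area term accounts for the change of variables from the surface to the texture). Everything here is hypothetical: `diffusion_profile` is a normalized exponential falloff chosen only for brevity, not the profile used in the paper, and drawing texels with probability proportional to E_i * A_i is one plausible reading of "importance sampling based on the irradiance", not the authors' exact scheme. On a GPU the sampling loop would run per pixel in a shader.

```python
import bisect
import math
import random


def diffusion_profile(r, sigma_tr=1.0):
    """Hypothetical stand-in for the diffusion profile R_d(r).

    An exponential falloff normalized over the plane, chosen for brevity;
    it is not the profile used in the paper.
    """
    return sigma_tr * sigma_tr * math.exp(-sigma_tr * r) / (2.0 * math.pi)


def scatter_exact(xo, texels, sigma_tr=1.0):
    """Reference: sum over every texel of R_d(|xo - x_i|) * E_i * A_i."""
    return sum(diffusion_profile(math.dist(xo, p), sigma_tr) * e * a
               for p, e, a in texels)


def scatter_importance(xo, texels, n_samples, rng, sigma_tr=1.0):
    """Monte Carlo estimate of scatter_exact from n_samples texel draws.

    texels: list of (position, irradiance E_i, area A_i), weights assumed >= 0.
    Texels are drawn with probability proportional to E_i * A_i through an
    inverse-CDF lookup, so brightly lit texels are sampled most often.
    """
    weights = [e * a for _, e, a in texels]
    cdf, total = [], 0.0
    for w in weights:
        total += w
        cdf.append(total)
    if total <= 0.0:
        return 0.0  # no irradiance anywhere, so nothing scatters to xo
    last = max(i for i, w in enumerate(weights) if w > 0.0)
    acc = 0.0
    for _ in range(n_samples):
        # Clamp in case rng.random() * total rounds up to exactly total.
        i = min(bisect.bisect_right(cdf, rng.random() * total), last)
        p, e, a = texels[i]
        pdf = weights[i] / total
        acc += diffusion_profile(math.dist(xo, p), sigma_tr) * e * a / pdf
    return acc / n_samples
```

Because each texel's weight divided by its probability equals the total weight, every sample contributes R_d(r) times that total: the estimate stays unbiased while the sample budget is spent where the irradiance is.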

For objects with more pronounced local effects, our approach generally requires more samples, which may lower the frame rate. To deal with this case, we decompose the integration into two parts, one for the local effect and the other for the global effect, which are evaluated by a modified local subsurface scattering method and by our texture space importance sampling, respectively. Such a hybrid scheme is able to steadily render the translucent effect in real time with a fixed number of samples.
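The hybrid decomposition can be sketched in the same style, under heavy assumptions. The split radius `r_local` is a hypothetical parameter, and the local part is summed exactly over nearby texels purely as a stand-in for the modified local subsurface scattering method, whose details are not reproduced here. Only the global part is importance-sampled, with a fixed budget of `n_global` samples (at least one), drawing far texels with probability proportional to E_i * A_i; `diffusion_profile` is again a hypothetical exponential falloff.

```python
import bisect
import math
import random


def diffusion_profile(r, sigma_tr=1.0):
    # Hypothetical exponential stand-in for R_d(r), normalized over the plane.
    return sigma_tr * sigma_tr * math.exp(-sigma_tr * r) / (2.0 * math.pi)


def scatter_hybrid(xo, texels, r_local, n_global, rng, sigma_tr=1.0):
    """Approximate sum_i R_d(|xo - x_i|) * E_i * A_i with a local/global split.

    texels: list of (position, irradiance E_i, area A_i), weights assumed >= 0.
    Local part (|xo - x_i| <= r_local): summed exactly, standing in for the
    modified local subsurface scattering method.
    Global part: importance-sampled with a fixed budget of n_global >= 1
    samples, drawing far texels with probability proportional to E_i * A_i.
    """
    local = 0.0
    far_r, cdf, total, last = [], [], 0.0, -1
    for p, e, a in texels:
        r = math.dist(xo, p)
        if r <= r_local:
            local += diffusion_profile(r, sigma_tr) * e * a
        else:
            far_r.append(r)
            total += e * a
            cdf.append(total)
            if e * a > 0.0:
                last = len(cdf) - 1  # last far texel that can be drawn
    if total <= 0.0:
        return local  # no far texel carries any irradiance
    acc = 0.0
    for _ in range(n_global):
        # Clamp in case rng.random() * total rounds up to exactly total.
        i = min(bisect.bisect_right(cdf, rng.random() * total), last)
        # (E_i * A_i) / pdf_i == total, so each sample adds R_d(r_i) * total.
        acc += diffusion_profile(far_r[i], sigma_tr) * total
    return local + acc / n_global
```

With this split, the cost of the global estimate is fixed by `n_global` regardless of how sharp the profile is near the shading point, which mirrors the motivation for the hybrid scheme.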

Publications

· Chih-Wen Chang, Wen-Chieh Lin, Tan-Chi Ho, Tsung-Shian Huang and Jung-Hong Chuang, Real-Time Translucent Rendering Using GPU-based Texture Space Importance Sampling, Computer Graphics Forum (Eurographics 2008), Vol. 27, No. 2, 2008, pp. 517-526.

Links to results

Comparison with Local Subsurface Scattering

Code

(Note that the code is no longer maintained.)