Research

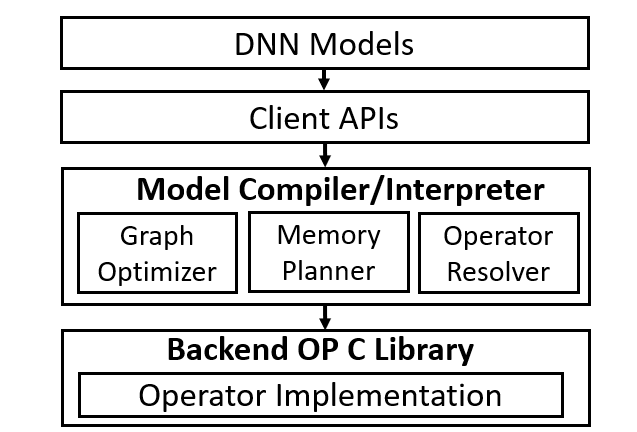

AI Compiler on Heterogeneous AI Accelerator

This project aims to develop an AI compiler framework that performs graph-level optimization, operator scheduling, operator fusion, and memory allocation. The goal is to improve performance on modern AI accelerators (GPUs, NPUs) that consist of multiple specialized engines. We prefer candidates with knowledge of C/C++, CUDA, LLVM, and MLIR. This project is supported by MOST. [NeurIPS 23] [HPCA 24] [TECS 24] [HPCA 25]

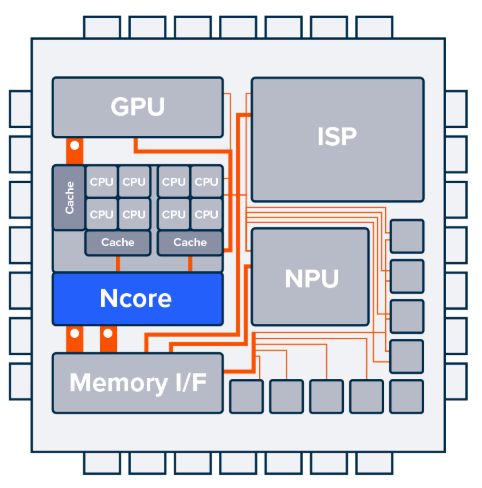

Energy-efficient Domain-Specific Accelerator Hardware Design

This project aims to design energy-efficient domain-specific hardware accelerators, including neural processing units (NPUs), systolic arrays, and even GPUs, to accelerate LLM inference, quantum circuit simulation, ray tracing, and post-quantum cryptography. This project seeks candidates with knowledge of digital logic design, VLSI, Verilog programming, RISC-V CPUs, and deep neural networks. This project is supported by MOST and a Google Research Grant.

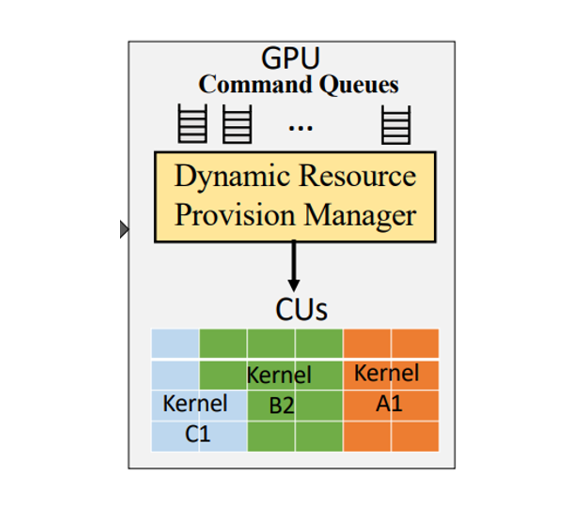

High-Performance GPU Architecture and Compilation

This project aims to optimize the performance of modern GPU architectures and programming models. It will design a new ray-tracing accelerator and develop high-performance programming models for deep neural network workloads. This project seeks candidates with knowledge of GPUs, CUDA, Triton, PyTorch, C/C++, computer graphics, and deep neural networks. This project is supported by MediaTek and MOST. [HPCA 21] [ASP-DAC 22] [ASP-DAC 23] [ISCA 25]

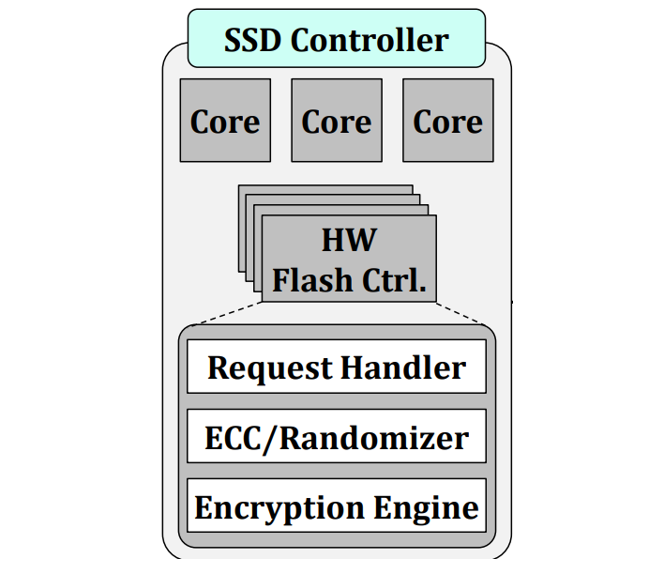

AI for EDA and Robotics

This project seeks candidates who want to learn how to use AI to generate RTL and validate circuits, and how to build robotic control systems, OSes, and middleware on embedded low-power CPUs/NPUs.